Laxis - Prospect Research

Overview

Connecting Insights to Action: Designing Personalized Outreach at Scale

Crafting an effective sales pitch often requires hours of prep work pulling research insights into the content. To help users spend less time preparing and more time connecting with customers, our team set out to refine the content-creation experience for outreach campaigns.

MVP of Prospect Research AI Agent released

2025-06

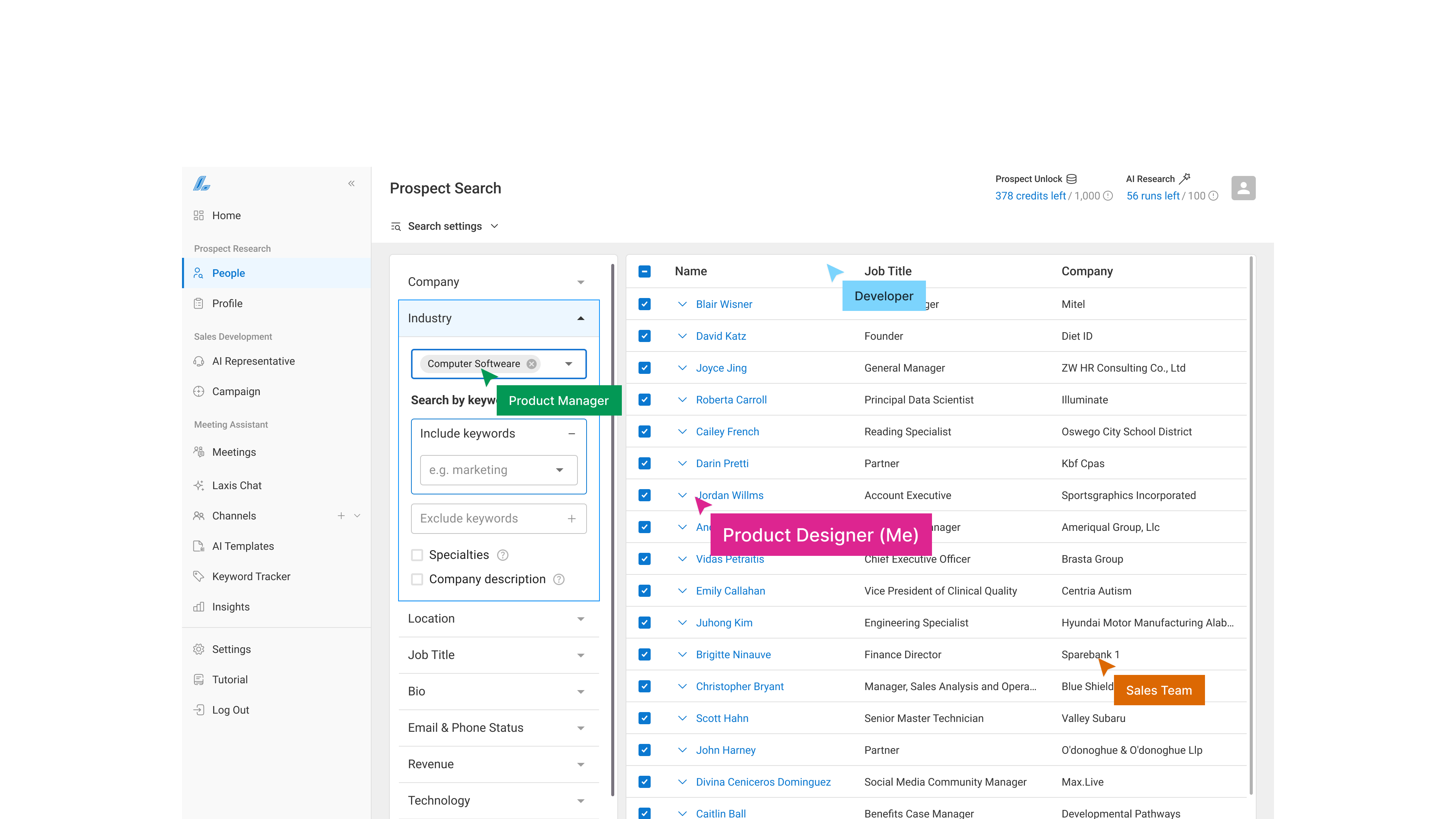

Unlock the Power of Intelligent Customer Research from 700M+ B2B Database


This case study shows how I refined the experience that lets users apply research insights directly to their message drafts, turning ideas into persuasive pitches.

Launch

2025-10

Improved Performance

>10%

Outreach reply rates

Consistent Deals

30%

Positive response rates

The Context

Why the Cold Email Didn’t Work

Laxis helps users save time by drafting and sending sales emails automatically. However, without the right context, many AI-written messages felt impersonal, generic, or irrelevant, and failed to show recipients why they should care.

Across 28M+ emails, it takes roughly 344 messages to earn a single meeting—just 3 wins for every 1000 attempts.

The Challenge

How might we transform generic AI-generated messages into personalized product pitches that drive stronger engagement?

Discovery

Comparative Message Analysis (Content Audit)

What Good Personalization Looks Like

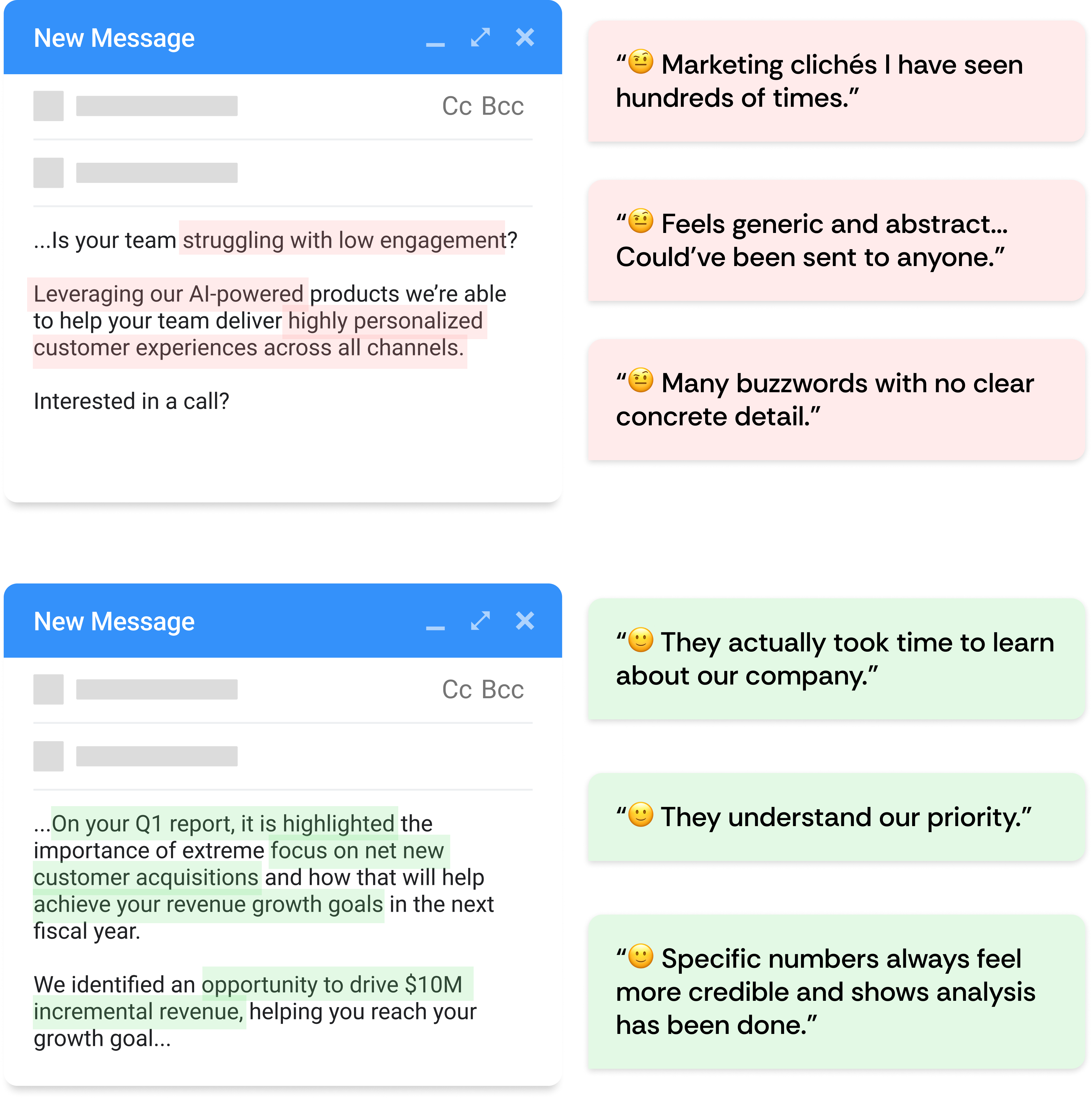

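To define what effective personalization means for our users, I conducted a Comparative Message Analysis, testing two versions of the same outreach email sent to C-level executives.

The analysis revealed that personalization is about more than inserting a name: it means showing an understanding of the recipient's context and needs. These insights guided the design of a flow that connects research information with generative AI to produce personalized content.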

Task Analysis

Disconnected Workflows, Manual Chaos

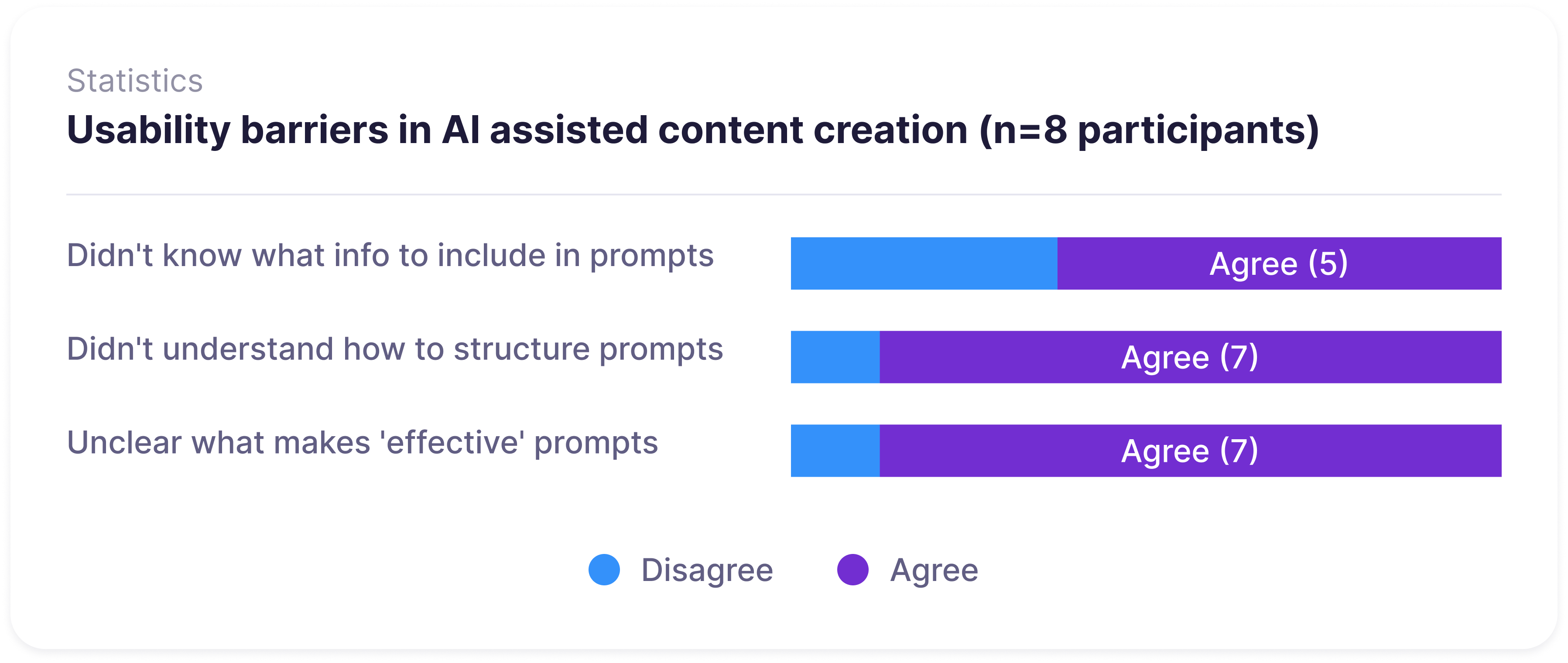

I ran this workshop to understand how users define outreach messages and how non-technical users create campaigns without support from our engineering team.

Synthesized Insight

How to Make AI Work for You, Not Against You

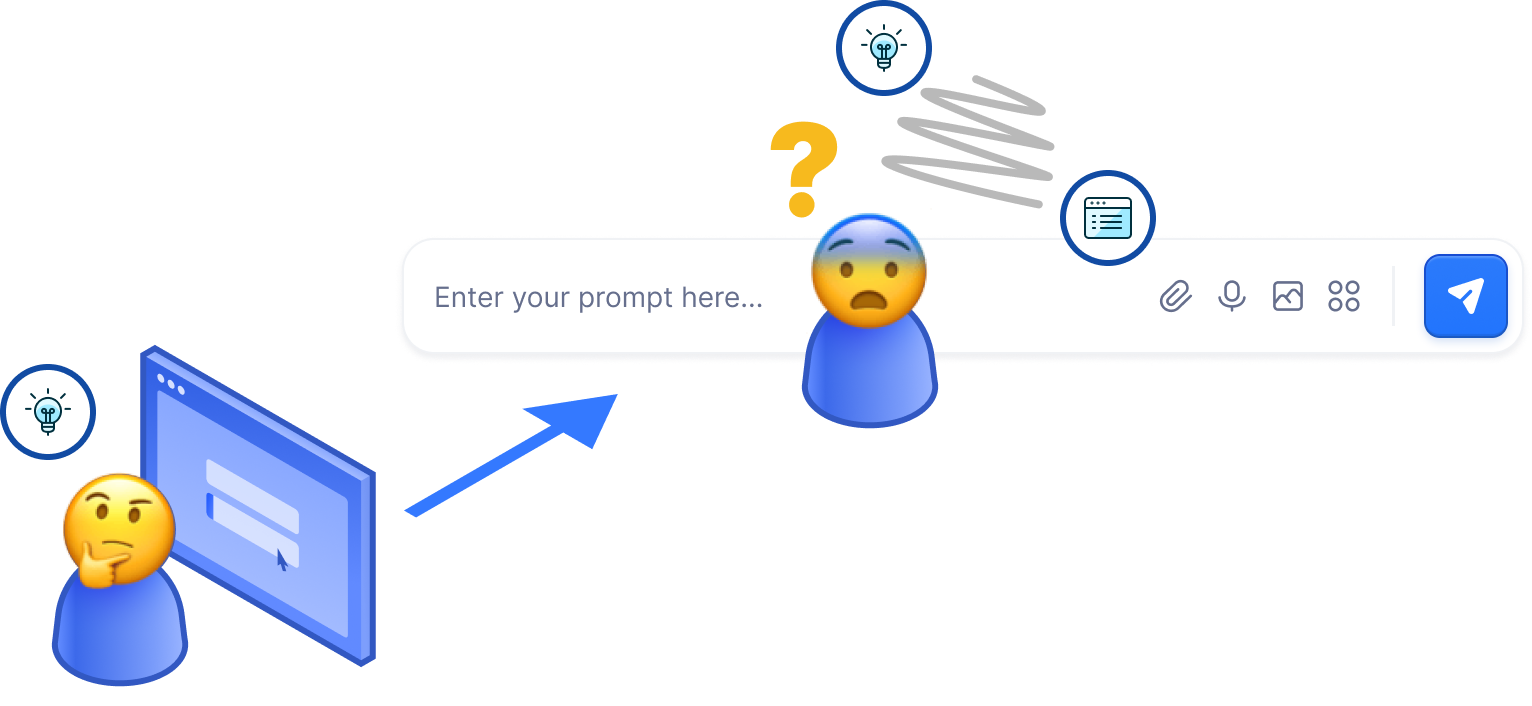

Sales reps knew personalized emails drive higher engagement, but they often found themselves staring at a blank prompt field, hoping for inspiration to strike. They didn't know how to instruct the AI to produce what they wanted.

- Frustrated, reps typed whatever prompt came to mind → inconsistent, unpredictable AI output

- Reps switched between ChatGPT and Laxis to copy and paste prompts → a disrupted workflow and repetitive friction

- Reps requested technical support, and our engineers jumped in to help → longer wait times while engineers fixed issues manually

The Opportunity

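AI only knows what you tell it: the more context it has, the better the output. A well-crafted prompt saves time, money, and frustration.

How might we transform robotic AI-generated messages into humanized product pitches that drive stronger engagement?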

Ideation

Information architecture

Refine the Bridge between Context and AI Prompt

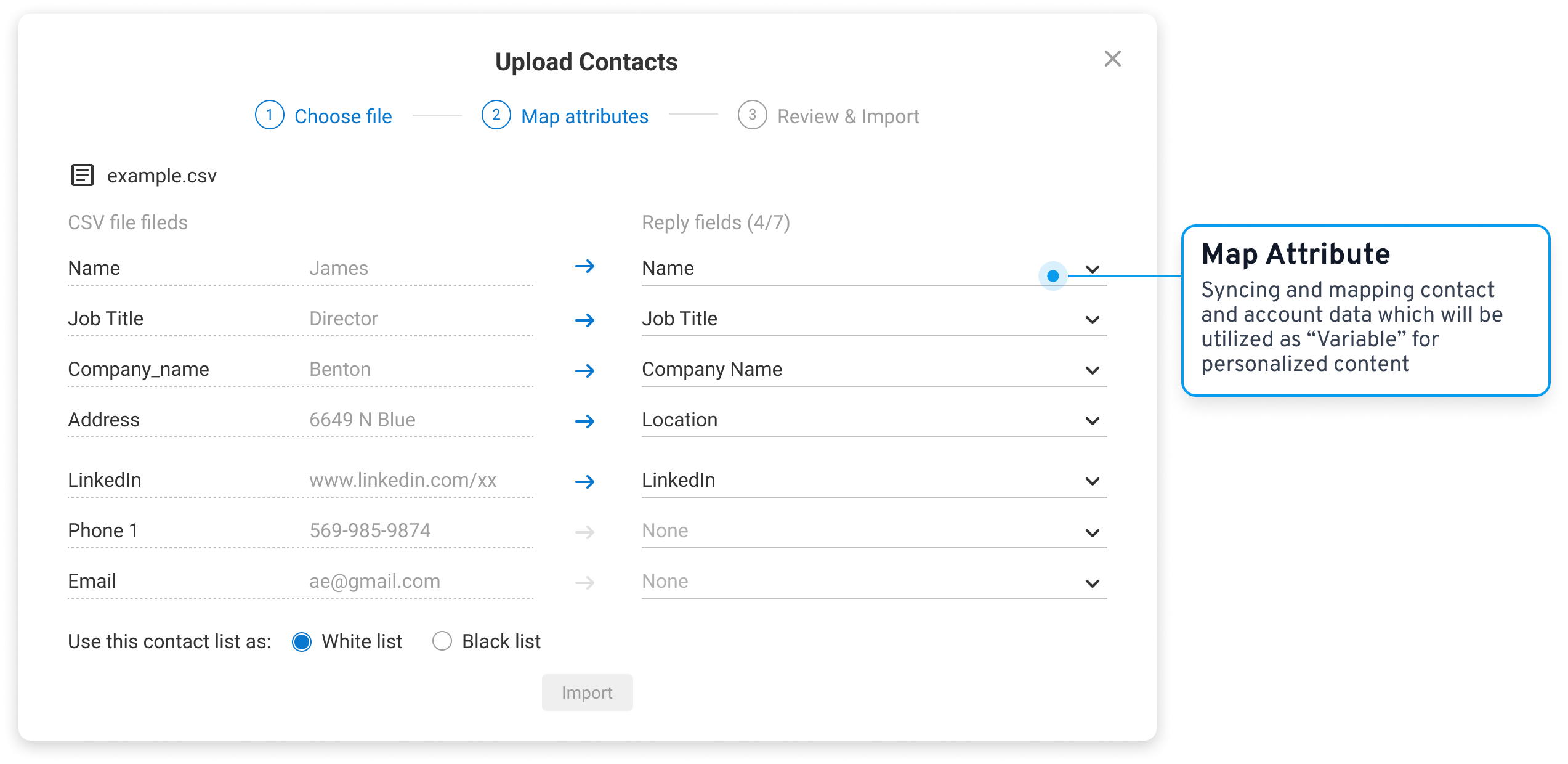

Create a workflow with unified, always-synced profiles and dynamic tools that pull insights at render time. Layer in behavior-based rules that adjust segments and content while a campaign is running. This reduces manual list churn and duplicate campaigns, lowers backend load, and drives higher relevance, more replies, and more wins.

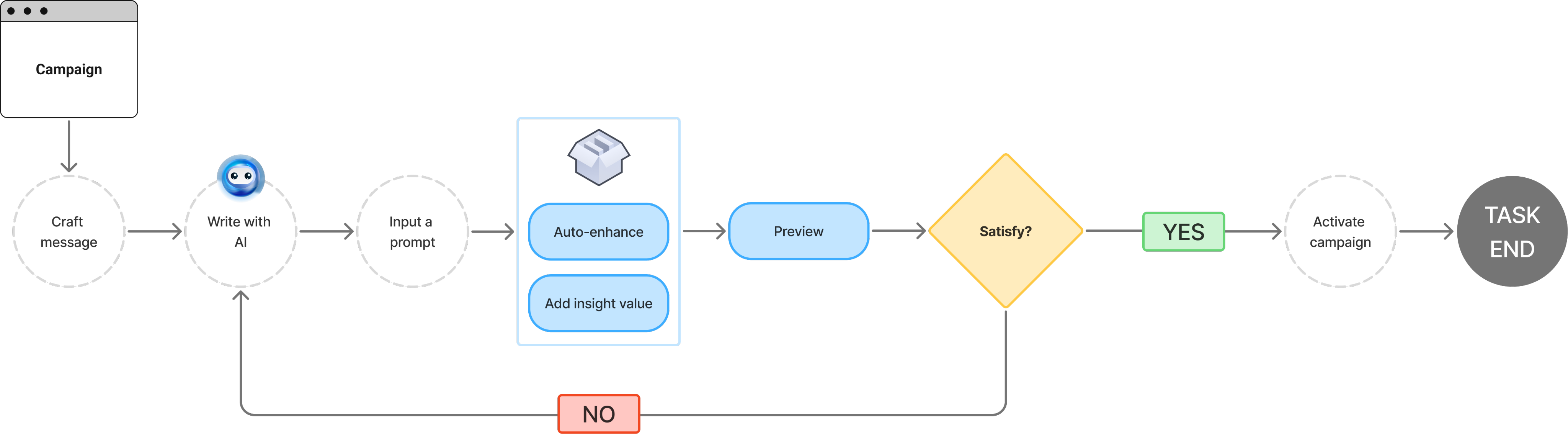

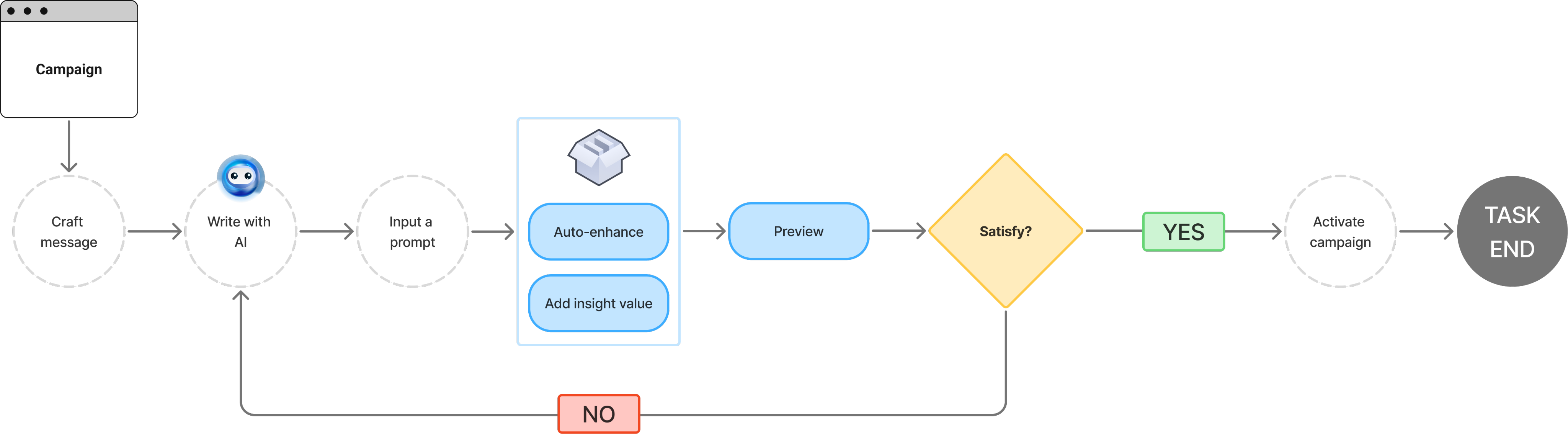

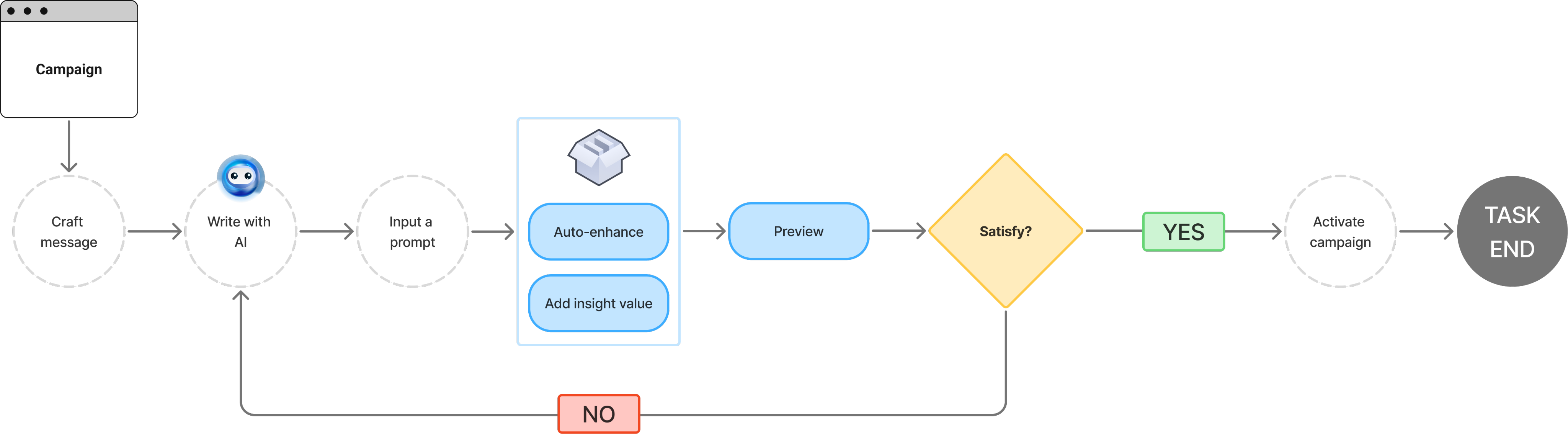

User Flow: Craft messages with AI

Wireframe

Stop Stacking Features; Shape Value Instead

The research surfaced a pain point around AI prompting, but rather than build another prompt assistant, I focused on what users actually wanted: a solid, personalized pitch they could confidently send to their customers.

This shifted my ideation from just “adding prompt tools” to “removing noise.” The wireframes below show early explorations that prioritized outcome clarity, progressive disclosure of options, and guided content generation.

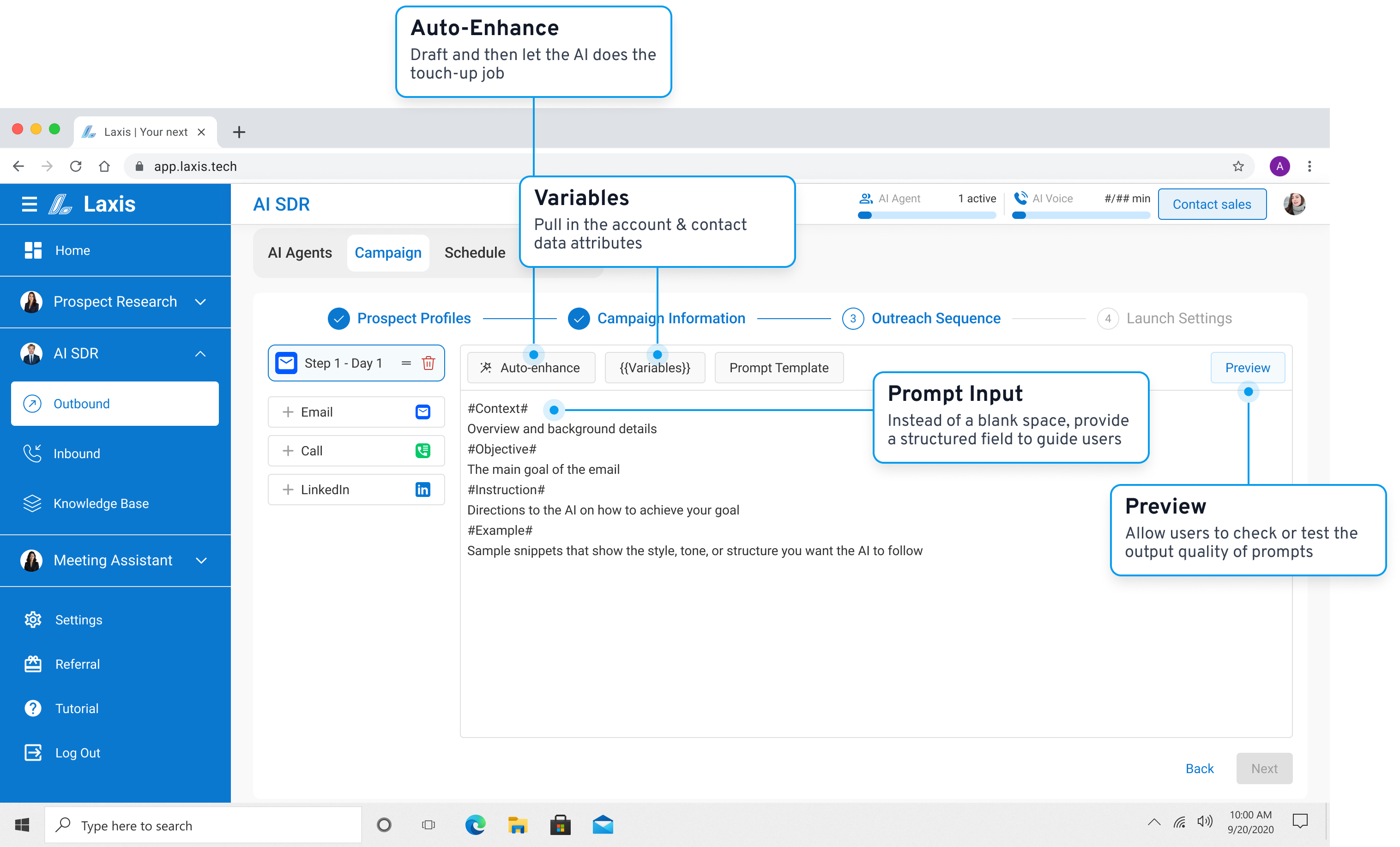

Highlight the key features that help users craft an ideal pitch

Experiment with different layouts to find the best solution

Iteration

The trade-off we missed

AI generation

Faster, scalable, data-informed BUT less precise and less natural-sounding than human writing

Human writing

Slower, manual effort BUT more authentic, contextually nuanced, and trustworthy

Prototype Validation

Testing the Structured Prompt Hypothesis

We believed that providing professionally crafted prompt templates would enable non-technical sales reps to generate send-ready emails. We ran internal tests with our engineering team, our sales team, and several business advisors.

Testing revealed an unexpected outcome: users didn't want AI to replace their own writing. They wanted AI to accelerate and enhance it.

Prototype Testing

AI Should Work Alongside Users, Not Replace Them

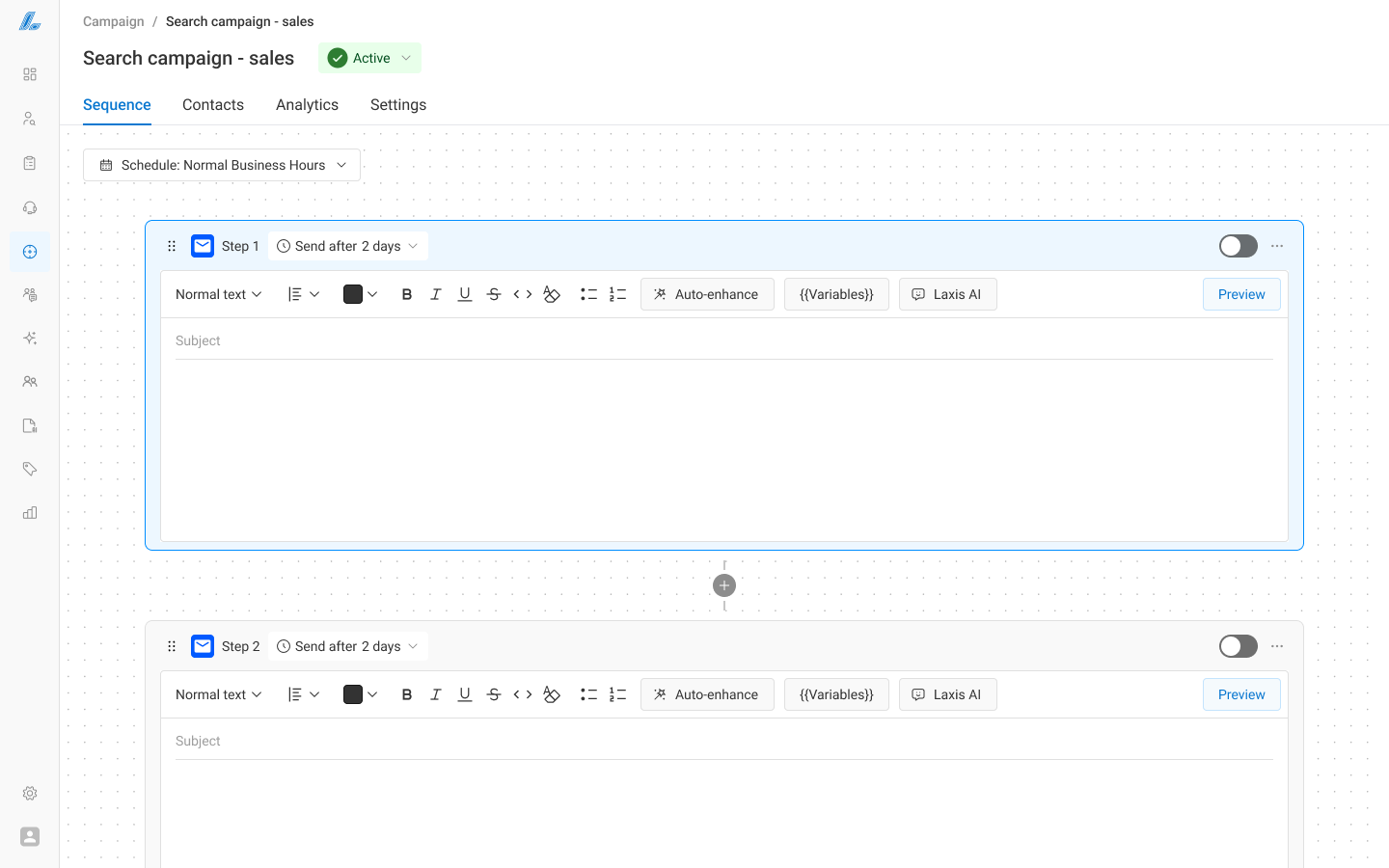

I added a traditional email input field alongside AI generation, creating two complementary workflows:

- AI-first: Generate draft from prompts → edit manually

- Human-first: Write draft manually → enhance with AI personalization

AI provides research context and personalization at scale, while users keep direct control over the message for authenticity.

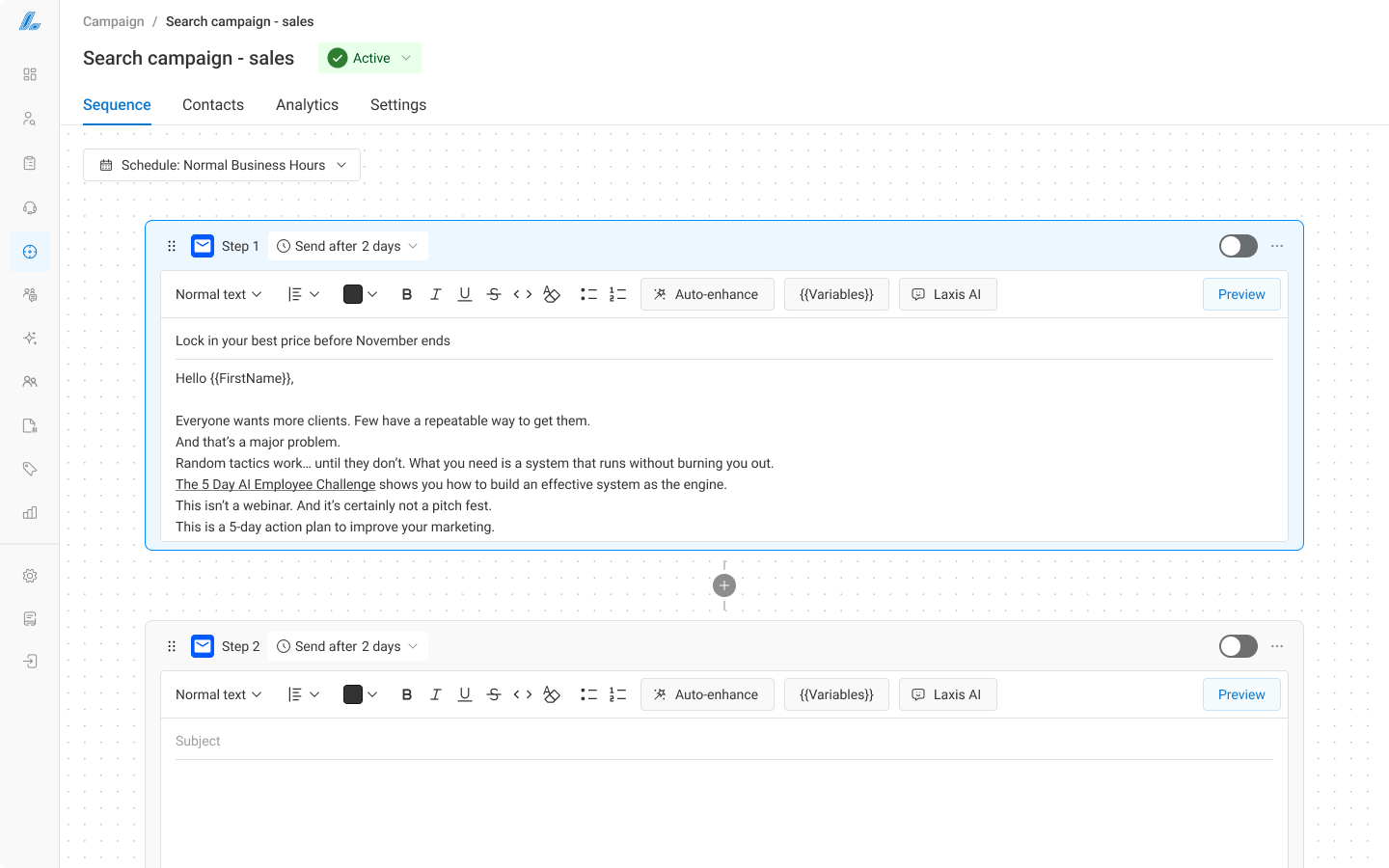

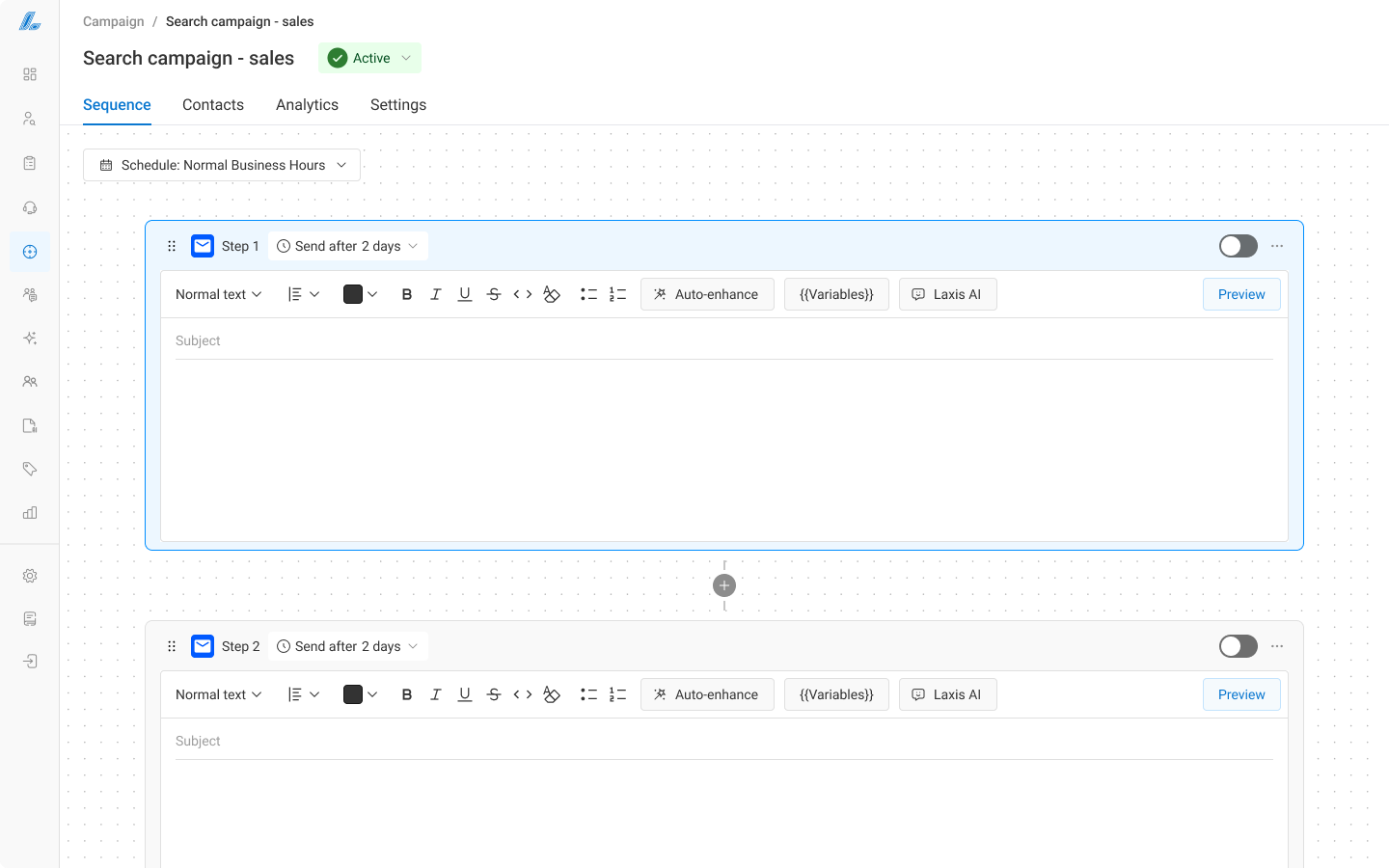

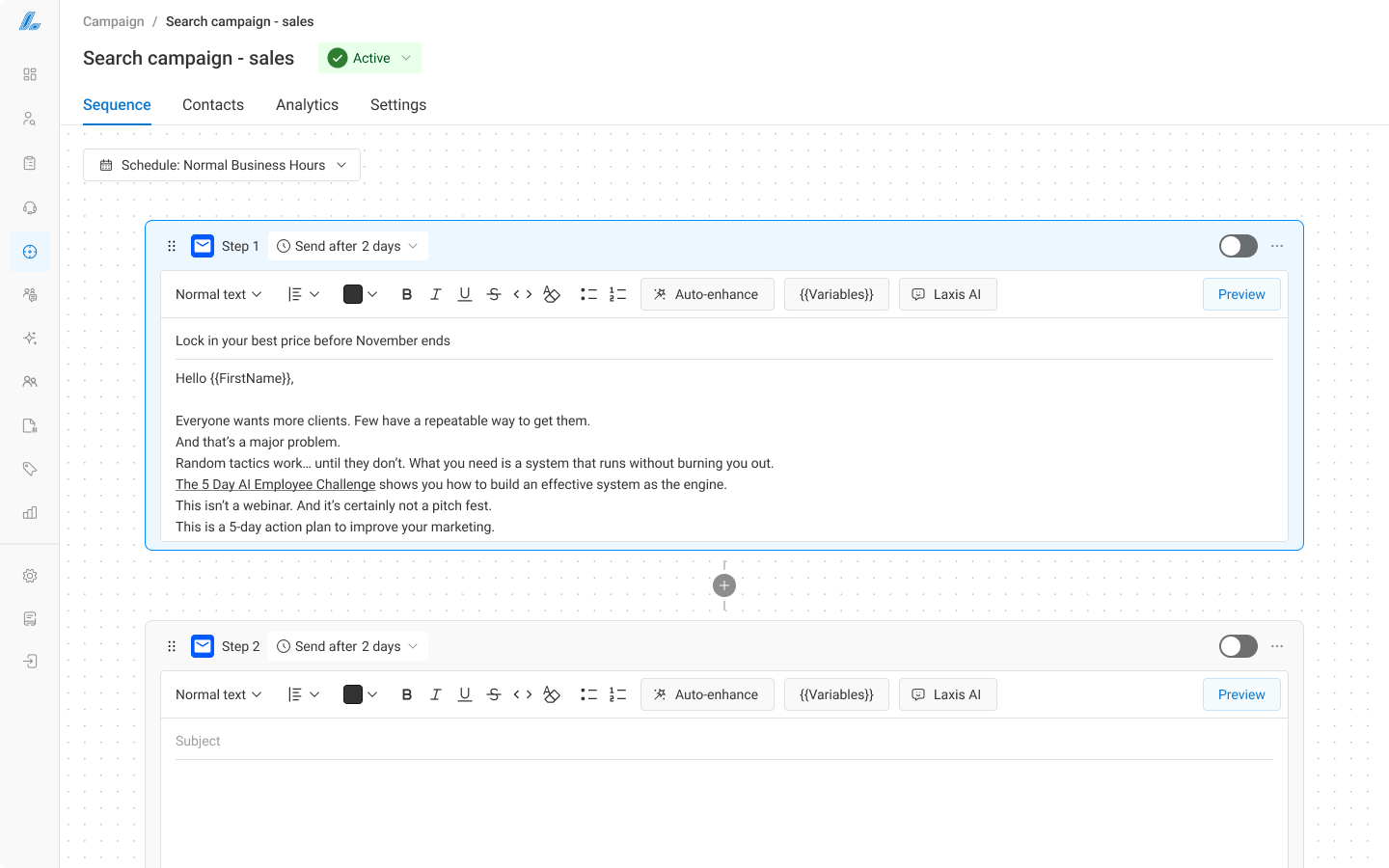

Updated and Finalized UI

Results

More Than an AI Content Writer

Laxis starts with the user's draft and enhances it by automatically adding relevant, personalized details, such as addressing what the recipient cares about. Users can then edit and customize the result to go deeper. This approach led to a 10% increase in reply rates and gave users more confidence in the quality of their outreach.

Laxis - Prospect Research

Overview

01

Discovery

02

Ideation

03

Iteration

04

Impact

05

Overview

Connecting Insights to Action: Designing Personalized Outreach at Scale

Crafting an effective sales pitch often requires hours of prep work to pulling research insights into the content. To help users spend less time preparing, and more time on connecting with customers, our team decided to refine the experience of creating content for the outreach campaign.

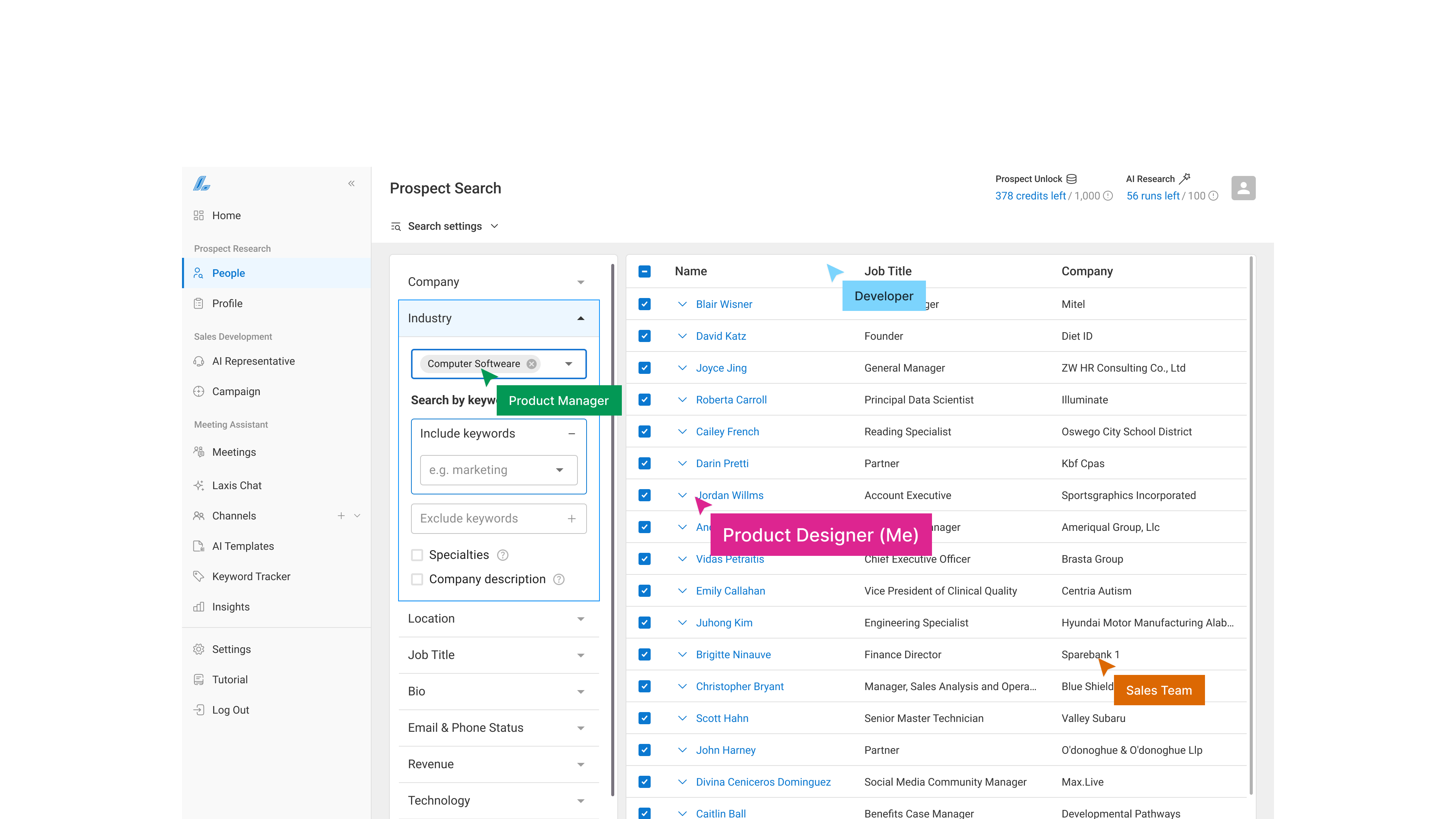

Team

Product Designer (Me)

Front & Backend Engineers

Product Manager

Sales

MVP of Prospect Research AI Agent released

2025-06

Unlock the Power of Intelligent Customer Research from 700M+ B2B Database

View Project

This case showcases how I refined the experience that enables users to apply the research insights directly into their message drafts — turning ideas into persuasive pitch.

Launch

2025-10

Improved Performance

>10%

Outreach reply rates

Consistent Deals

30%

Positive response rates

The Context

Why the Cold Email Didn’t Work

Laxis helps users save time by drafting and sending sales emails automatically. However, without the right context, many AI-written messages can feel impersonal, generic or irrelevant. It didn’t convince audiences why should they care.

Across 28M+ emails, it takes roughly 344 messages to earn a single meeting—just 3 wins for every 1000 attempts.

The Challenge

How might we transform AI-generated generic messages into

personalized product pitch driving stronger engagement?

Discovery

Comparative Message Analysis (Content Audit)

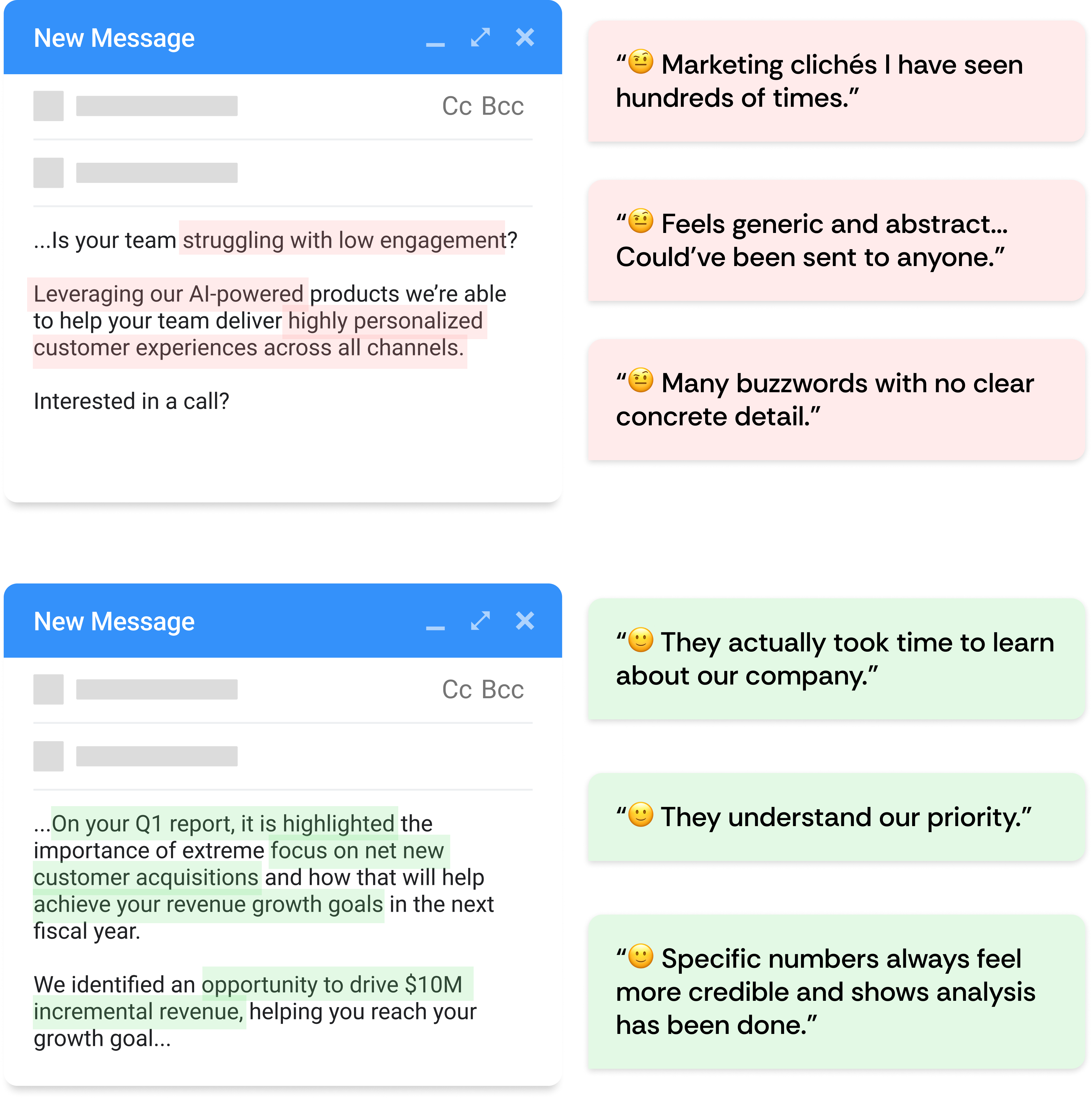

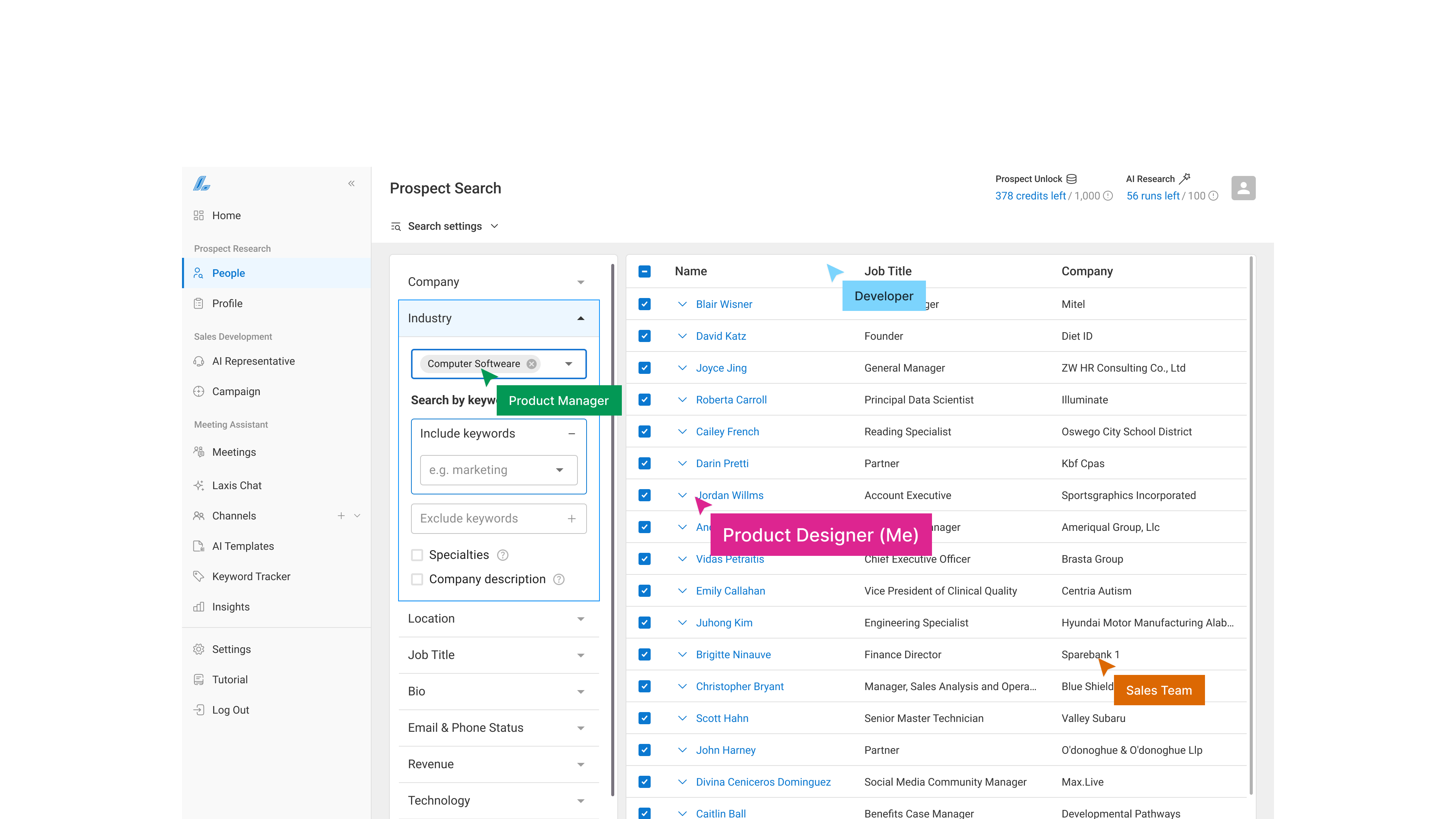

What Good Personalization Looks Like

To define what effective personalization means for our users, I conducted a Comparative Message Analysis—testing two versions of the same outreach email sent to C-level executives.

Task Analysis

Disconnected Workflows, Manual Chaos

I determined to have this workshop for understanding how would users define outreach messages and how non-tech-savvy users create campaigns without support from our engineering teams.

Synthesized Insight

How make AI work for you, not against you

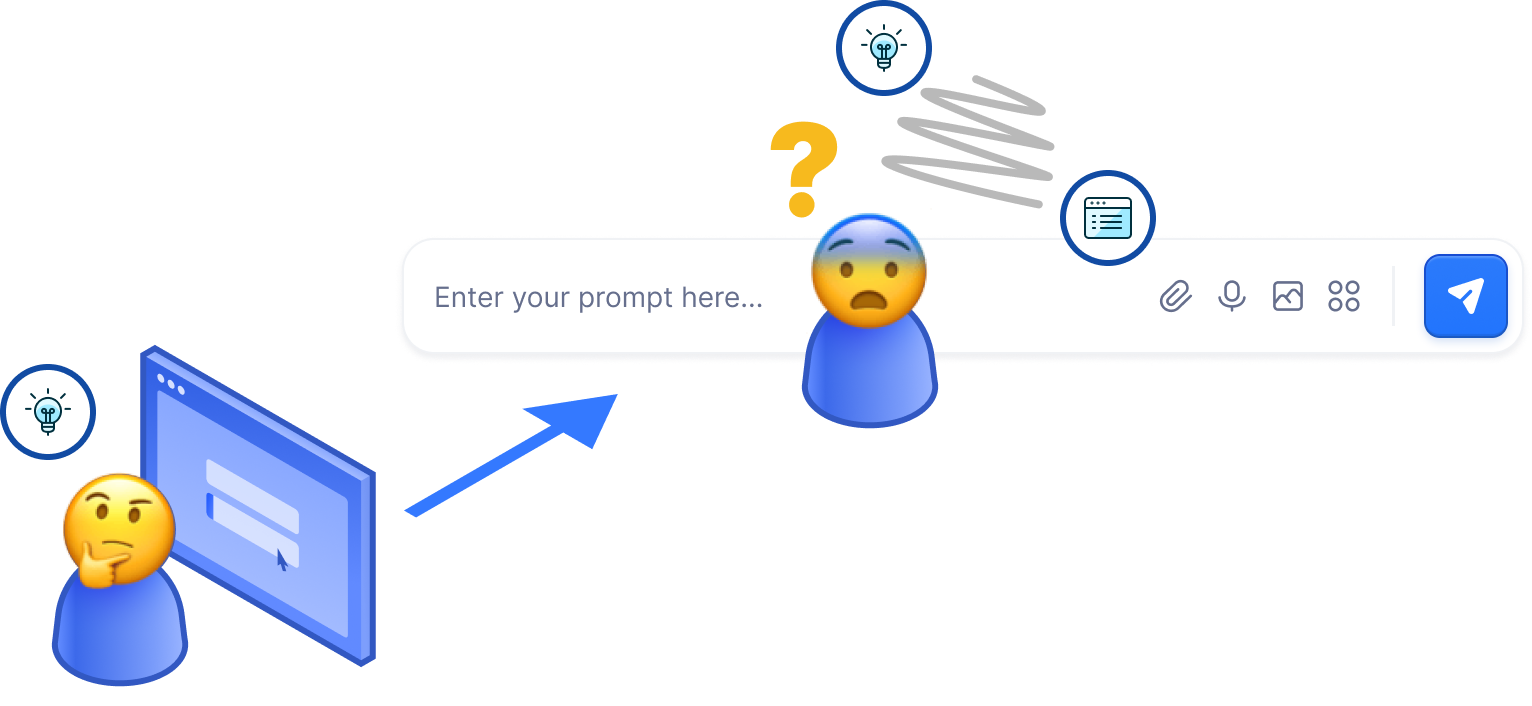

Sales reps know personalized emails drive higher engagement, but they often found themselves staring at the blank prompt field, hoping for inspiration to strike...

Frustrated and just input whatever prompt comes to mind

Inconsistent and unpredictable AI outputs

Switch between ChatGPT and Laxis to copy & paste prompts

Disrupted workflow and causing repetitive friction

Request for technical support and our engineers will jump in for help

Longer wait times as engineers were manually fixing issues

The Opportunity

How might we transform AI-generated robotic messages into humanized product pitch to drive stronger engagement?

Ideation

Information architecture

Refine the Bridge between Context and AI Prompt

Create a workflow where profiles are unified and always syncing and dynamic tools to pull insights at render time. Layer in behavior-based rules to adjust segments and content mid-flight. This reduces manual list churn and duplicate campaigns, lowers backend stress, and drives higher relevance, replies, and wins.

User Flow: Craft messages with AI

Wireframe

Stop Stacking Features; Shape Value Instead

The research showed the pain point around AI prompting, but rather than building another prompt assistant, I identified what users actually wanted: a solid, personalized pitch they could confidently send to their customers.

This shifted my ideation from just “adding prompt tools” to “removing noise.” The wireframes below show early explorations that prioritized outcome clarity, progressive disclosure of options, and guided content generation.

Highlight the key features that help users to craft an ideal pitch

Experiment with different layouts for best solution

Iteration

The trade-off we missed

AI generation

Faster, scalable, data-informed BUT less precise and less natural-sounding than human writing

Human writing

Slower, manual effort BUT more authentic, contextually nuanced, and trustworthy

Prototype Validation

Testing the Structured Prompt Hypothesis

We believed that providing professionally-crafted prompt templates would enable non-technical sales reps to generate send-ready emails. We conducted internal testing with our engineering team, internal sales team, and several business advisors.

Testing revealed an unexpected outcome: users didn't want AI to replace their writing access. They just wanted AI to accelerate and enhance their writing.

Prototype Testing

AI Should Work Alongside, Not Instead of User

Added a traditional Email input field alongside AI generation, creating two complementary workflows:

- AI-first: Generate draft from prompts → edit manually

- Human-first: Write draft manually → enhance with AI personalization

AI provides research context and personalization at scale, and users still have direct control for authenticity.

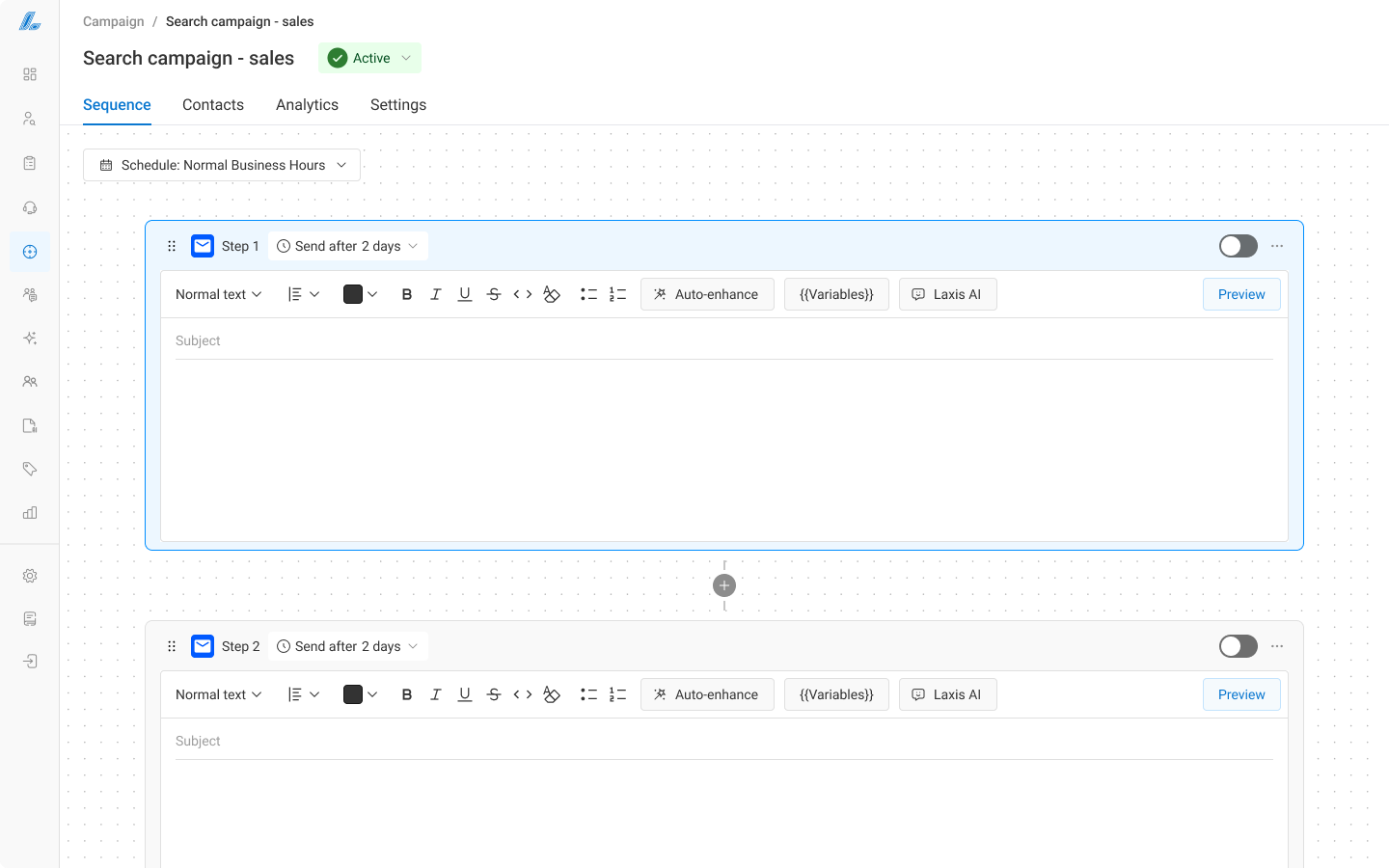

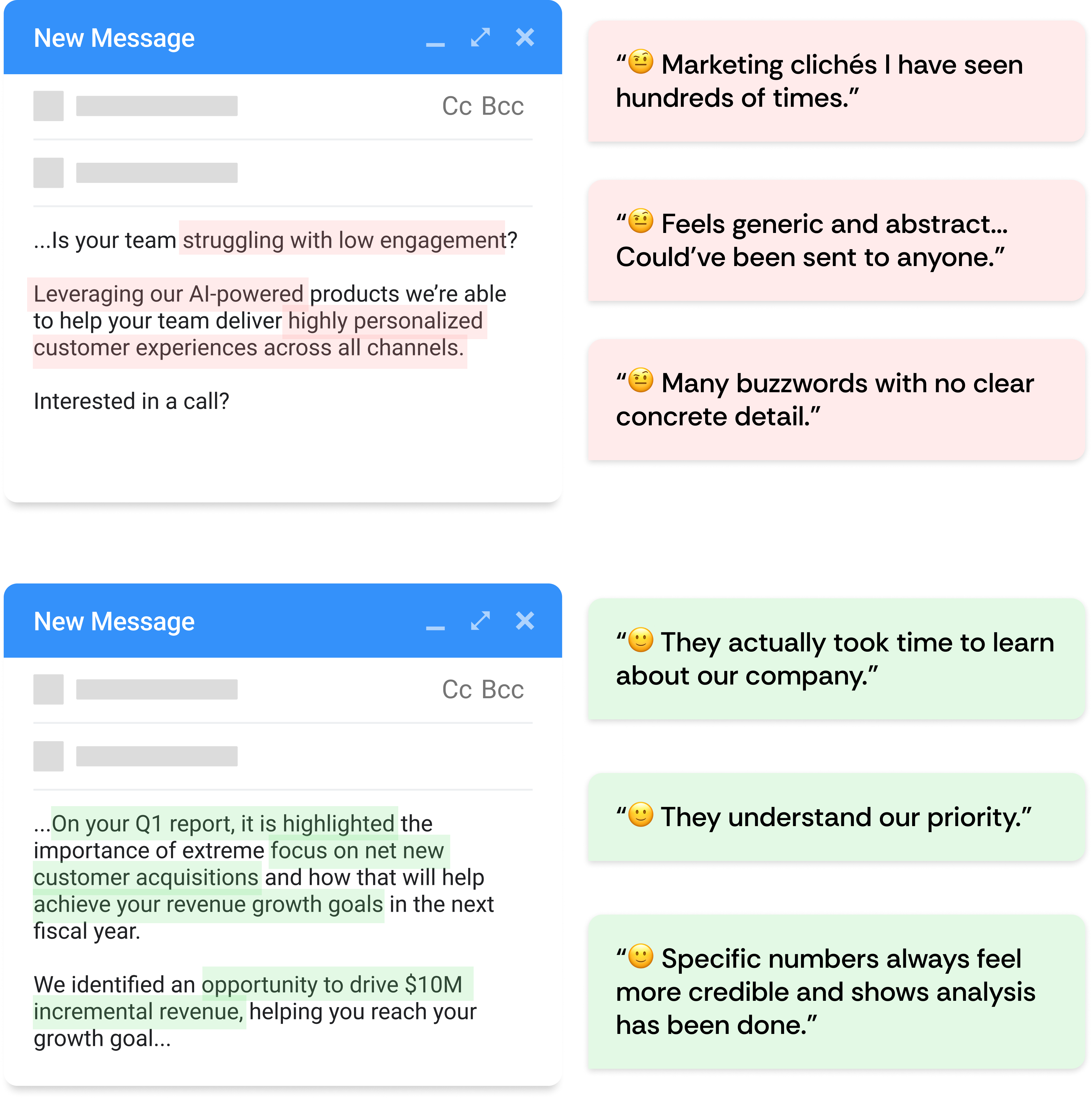

Updated and Finalized UI

Results

More Than an AI Content Writer

Laxis will start with the user’s draft and enhances it by automatically adding relevant, personalized details — like addressing what the recipient cares about. Users are free to edit and customize the result to go deeper. This approach led to a 10% increase in reply rates and gave users more confidence in the quality of their outreach.

Overview

Connecting Insights to Action: Designing Personalized Outreach at Scale

Crafting an effective sales pitch often requires hours of prep work to pulling research insights into the content. To help users spend less time preparing, and more time on connecting with customers, our team decided to refine the experience of creating content for the outreach campaign.

Team

Product Designer (Me)

Front & Backend Engineers

Product Manager

Sales

MVP of Prospect Research AI Agent released

2025-06

Unlock the Power of Intelligent Customer Research from 700M+ B2B Database

View Project

This case showcases how I refined the experience that enables users to apply the research insights directly into their message drafts — turning ideas into persuasive pitch.

Launch

2025-10

Improved Performance

>10%

Outreach reply rates

Consistent Deals

30%

Positive response rates

The Context

Why the Cold Email Didn’t Work

Laxis helps users save time by drafting and sending sales emails automatically. However, without the right context, many AI-written messages can feel impersonal, generic or irrelevant. It didn’t convince audiences why should they care.

Across 28M+ emails, it takes roughly 344 messages to earn a single meeting—just 3 wins for every 1000 attempts.

The Challenge

How might we transform AI-generated generic messages into

personalized product pitch driving stronger engagement?

Discovery

Comparative Message Analysis (Content Audit)

What Good Personalization Looks Like

This analysis revealed that personalization is not about just inserting a name. It’s about showing understanding of an audience’s context and needs. These insights guided how I should design a flow that connects the research information with Generative AI to optimize personalized content.

Co-Creation Workshops

Find What Went Wrong in Current User Flow

I determined to have this workshop for understanding how would users define outreach messages and how non-tech-savvy users create campaigns without support from our engineering teams.

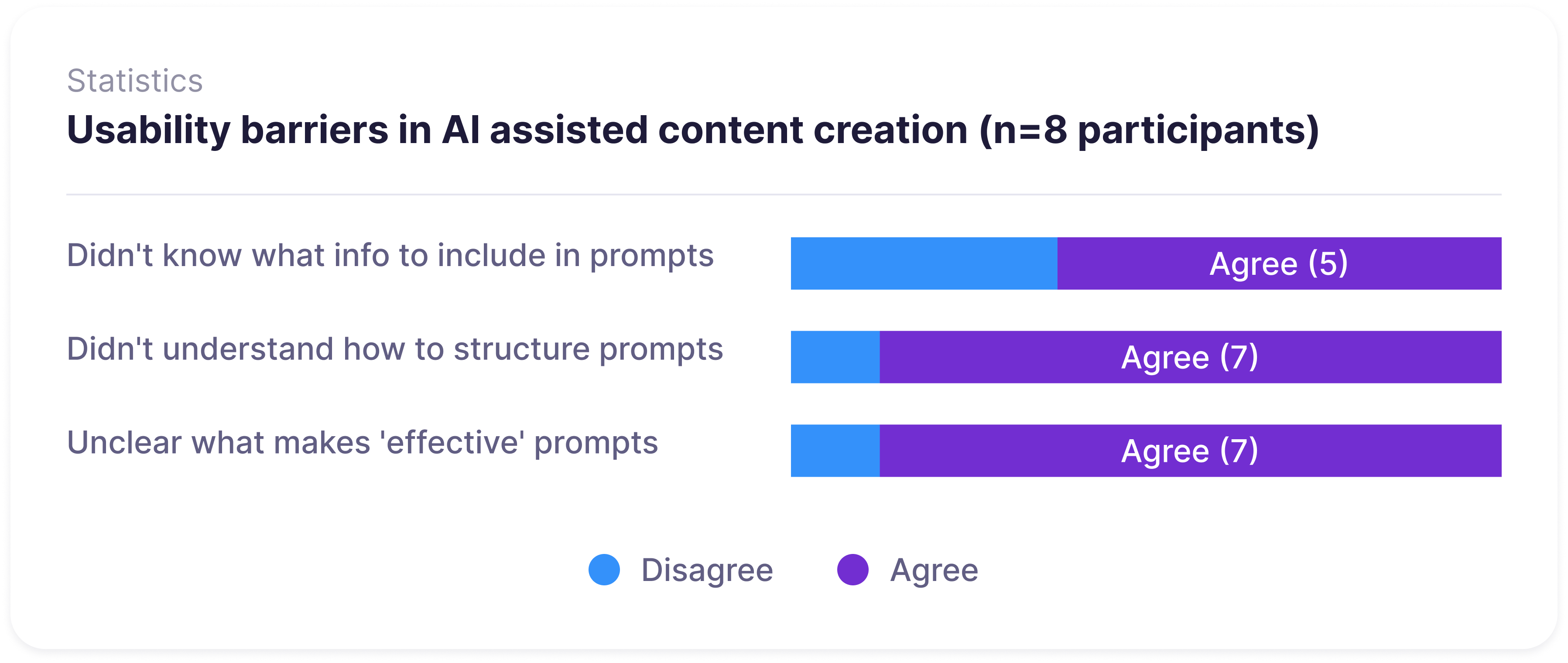

Patterns & Insights

No Context + Vague Prompts = Generic Output

Sales reps know personalized emails drive higher engagement, but they often found themselves staring at the blank prompt field. They don’t know how to instruct the AI to do what they want it to do.

Frustrated and just input whatever prompt comes to mind

Inconsistent and unpredictable AI outputs

Switch between ChatGPT and Laxis to copy & paste prompts

Disrupted workflow and causing repetitive friction

Request for technical support and our engineers will jump in for help

Longer wait times as engineers were manually fixing issues

The Opportunity

AI only knows what you tell it – The more context, the better the output. A well-crafted prompt saves time, money, and frustration.

Ideation

Information architecture

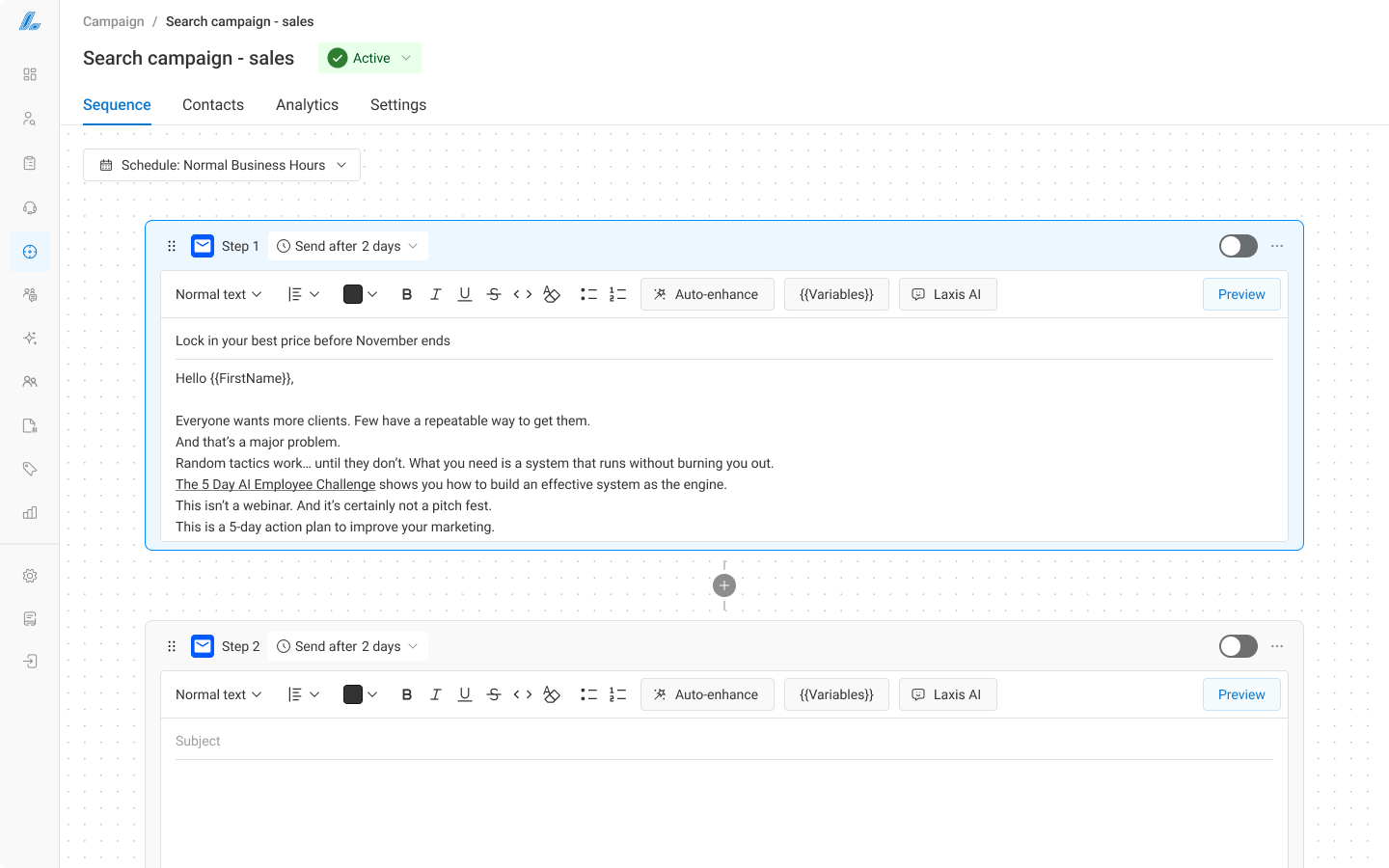

Refine the Bridge between Context and AI Prompt

Create a workflow where profiles are unified and always syncing and dynamic tools to pull insights at render time. Layer in behavior-based rules to adjust segments and content mid-flight. This reduces manual list churn and duplicate campaigns, lowers backend stress, and drives higher relevance, replies, and wins.

User Flow: Craft messages with AI

Wireframe

Focusing on Key Value — Because Less Is Always More

The research showed the pain point around AI prompting, but rather than building another prompt assistant, I identified what users actually wanted: a solid, personalized pitch they could confidently send to their customers.

This shifted my ideation from just “adding more tools” to “removing noise.” The wireframes below show early explorations that prioritized outcome clarity, progressive disclosure of options, and guided content creation.

Highlight the key features that help users to craft an ideal pitch

Experiment with different layouts for best solution

Iteration

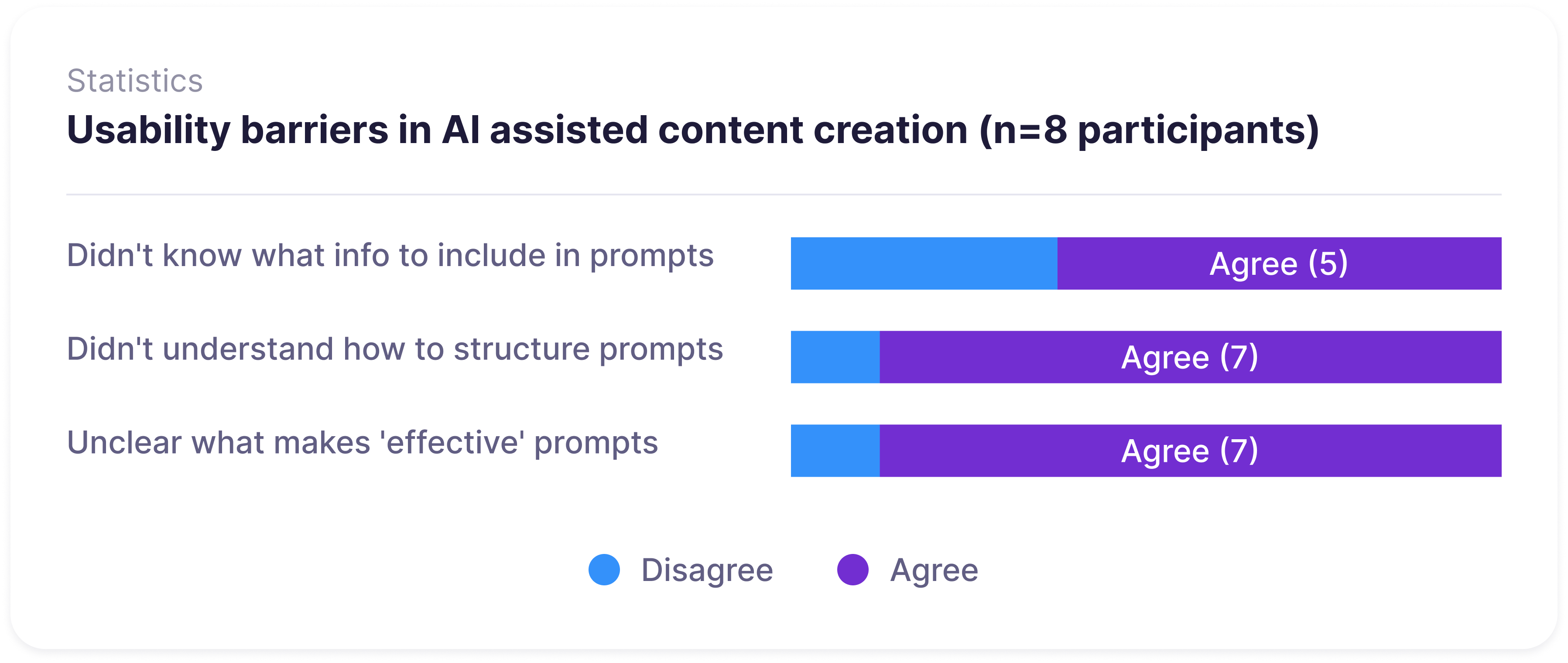

Prototype Validation

Testing the Structured Prompt Hypothesis

We believed that providing professionally-crafted prompt templates would enable non-technical sales reps to generate send-ready emails. We conducted internal testing with our engineering team, internal sales team, and several business advisors.

Testing revealed an unexpected outcome: users didn't want AI to replace their writing access. They just wanted AI to accelerate and enhance their writing.

Prototype Testing

The trade-off we missed

AI generation

Faster, scalable, data-informed BUT less precise and less natural-sounding than human writing

Human writing

Slower, manual effort BUT more authentic, contextually nuanced, and trustworthy

AI Should Work Alongside, Not Replacing User

Added a traditional Email input field alongside AI generation, creating two complementary workflows:

- AI-first: Generate draft from prompts → edit manually

- Human-first: Write draft manually → enhance with AI personalization

AI provides research context and personalization at scale, and users still have direct control for authenticity.

Updated and Finalized UI

Impact

More Than an AI Content Writer

Laxis will start with the user’s draft and enhances it by automatically adding relevant, personalized details — like addressing what the recipient cares about. Users are free to edit and customize the result to go deeper. This approach led to a 10% increase in reply rates and gave users more confidence in the quality of their outreach.